On December 6th, Advanced Micro Devices (AMD) marked a pivotal moment in its more than 50-year history with the launch of the highly anticipated MI300 series in San Jose, California. The release puts AMD in direct competition with NVIDIA in the fast-moving field of artificial intelligence accelerators.

AMD introduced two variants: the MI300X, a graphics processing unit (GPU) built for artificial intelligence computation, and the MI300A, which combines GPU cores with a standard central processing unit (CPU) and targets artificial intelligence and scientific research applications.

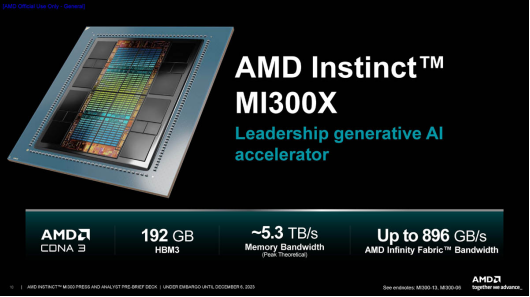

MI300X, built on a 5nm process, features up to 8 XCDs (accelerator complex dies), 304 compute units based on the CDNA3 architecture, 8 HBM3 stacks, 192GB of memory, a memory bandwidth of 5.3TB/s, and an Infinity Fabric bus bandwidth of 896GB/s. With over 150 billion transistors, MI300X offers 2.4 times the memory density and 1.6 times the memory bandwidth of NVIDIA's leading H100. According to AMD CEO Lisa Su, the new chip is comparable to the H100 in training artificial intelligence software and outperforms it in inference tasks.
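The two ratios quoted above can be sanity-checked with simple arithmetic. The sketch below takes the MI300X figures from this article and assumes the H100 SXM figures of 80GB of memory and 3.35TB/s of bandwidth, which the article itself does not state. Under that assumption, the 2.4x figure corresponds to the ratio of memory capacities.

```python
# Sanity check of the MI300X-vs-H100 memory ratios quoted in the article.
# MI300X figures come from the article. The H100 figures (80 GB, 3.35 TB/s)
# are ASSUMPTIONS based on the H100 SXM variant; the article does not give them.

MI300X = {"memory_gb": 192.0, "bandwidth_tb_per_s": 5.3}
H100_SXM_ASSUMED = {"memory_gb": 80.0, "bandwidth_tb_per_s": 3.35}


def spec_ratio(chip, baseline, key):
    """Return how many times larger chip[key] is than baseline[key]."""
    return chip[key] / baseline[key]


capacity_ratio = spec_ratio(MI300X, H100_SXM_ASSUMED, "memory_gb")
bandwidth_ratio = spec_ratio(MI300X, H100_SXM_ASSUMED, "bandwidth_tb_per_s")

print(f"Memory capacity ratio:  {capacity_ratio:.1f}x")
print(f"Memory bandwidth ratio: {bandwidth_ratio:.1f}x")
```

With these assumed baseline values, both results round to the figures AMD quotes. A different H100 variant would give different ratios.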

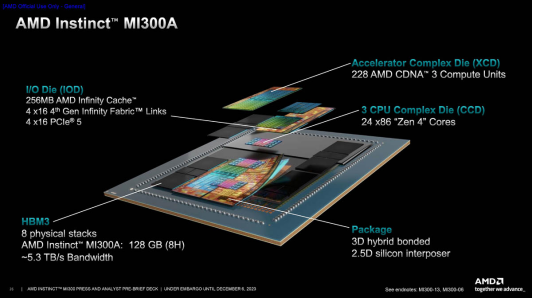

MI300A, meanwhile, integrates Zen 4 CPU cores and CDNA3 GPU cores in a single package, using unified HBM3 memory and the 4th generation Infinity Fabric high-speed bus to simplify the architecture and make programming easier. MI300A has 228 CDNA3 compute units, 24 Zen 4 x86 cores, 4 I/O dies, 8 HBM3 stacks, 128GB of memory, a peak bandwidth of 5.3TB/s, 256MB of Infinity Cache, and 3.5D packaging. With these advantages, MI300A delivers four times the performance of the H100 when running OpenFOAM.

NVIDIA has long dominated the artificial intelligence chip market, with its H100 chip leading the sector. NVIDIA recently reported quarterly AI chip sales of approximately $14.5 billion, up from $3.8 billion a year earlier, which helped push its valuation past $1 trillion.

Beyond AMD, several tech giants have sought to challenge NVIDIA. Intel has introduced its own suite of artificial intelligence chips, while Amazon, Google, and Microsoft are developing proprietary AI chips. NVIDIA, however, continues to innovate: last month it unveiled its top-tier AI chip platform, the HGX H200, with 1.4 times the memory bandwidth and 1.8 times the memory capacity of the H100, improving its ability to handle intensive generative artificial intelligence workloads.

AMD says that systems equipped with its new chips perform on par with NVIDIA's top-tier H100-based systems when building complex AI tools, and respond faster when generating output from large language models. AMD also highlights the chips' large memory capacity, which lets them handle increasingly large AI models.

In another strategic move to challenge NVIDIA, AMD introduced the ROCm 6 software platform, a competitor to NVIDIA's proprietary CUDA platform. "Software is the driving factor," said Lisa Su.

AMD expects the MI300 series to win over tech giants and potentially bring in billions of dollars in revenue. In its latest financial report, the company said that strong demand for computing power should lift AI chip revenue to $400 million in the fourth quarter and above $2 billion next year.

Microsoft CEO Satya Nadella announced last month that Microsoft Azure would be the first cloud service to offer AMD's new MI300X AI chip. AMD also said that customers for these processors include Oracle and Meta, and that OpenAI is adding support for AMD's new chips to the latest version of its Triton AI software.

In a post-event interview, Lisa Su expressed optimism about the AI chip industry, projecting that the market could grow to $400 billion by 2027. That estimate far exceeds other predictions: research firm Gartner forecasts that the AI chip market will reach around $119 billion in 2027, up from approximately $53 billion this year.
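To put the gap between the two forecasts in perspective, the sketch below computes the compound annual growth rate implied by the Gartner figures and the ratio between the AMD and Gartner estimates for 2027. It assumes that "this year" means 2023, giving a four-year span. The function and variable names are illustrative only.

```python
# Figures quoted in the article, in billions of US dollars.
GARTNER_THIS_YEAR = 53.0
GARTNER_2027 = 119.0
AMD_2027 = 400.0

# ASSUMPTION: "this year" in the article is 2023, so the forecast spans 4 years.
YEARS = 2027 - 2023


def cagr(start, end, years):
    """Compound annual growth rate, returned as a fraction (0.10 == 10%)."""
    return (end / start) ** (1.0 / years) - 1.0


gartner_cagr = cagr(GARTNER_THIS_YEAR, GARTNER_2027, YEARS)
amd_vs_gartner = AMD_2027 / GARTNER_2027

print(f"Implied Gartner growth rate: {gartner_cagr:.1%} per year")
print(f"AMD 2027 estimate vs Gartner 2027 forecast: {amd_vs_gartner:.1f}x")
```

Under that assumption, the Gartner forecast implies growth of roughly 22% a year, while AMD's 2027 estimate is more than three times Gartner's. The two figures may not define the AI chip market in the same way, so the comparison is only indicative.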