On March 18, at its GPU Technology Conference (GTC 2024), NVIDIA announced its latest-generation GPU architecture, Blackwell, aimed at AI training and inference in data centers. NVIDIA claims Blackwell offers better AI training performance and energy efficiency than its popular predecessor, Hopper.

The Blackwell series comprises two main models: the B200 and the GB200, which consists of two B200 chips combined with a Grace CPU.

· B200: 208 billion transistors, up to 20 petaflops of FP4 compute power.

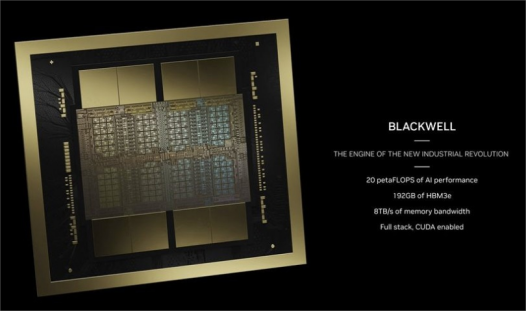

The Blackwell B200 packs 208 billion transistors, roughly 2.6 times the 80 billion in its predecessor, the H100. NVIDIA uses this larger transistor budget to deliver up to 20 petaFLOPS of FP4 compute per GPU. According to NVIDIA, in a benchmark based on the 175-billion-parameter GPT-3 model, the GB200 (two B200 GPUs plus a Grace CPU) delivered seven times the performance of the H100 and four times its training speed.
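A quick back-of-the-envelope check of the transistor comparison. The B200 figure comes from the paragraph above; the 80-billion figure for the H100 is NVIDIA's published specification. This is only arithmetic on headline numbers, not a measure of performance.

```python
# Transistor counts in billions.
# B200: from the text. H100: NVIDIA's published spec (80 billion).
B200_TRANSISTORS_BN = 208
H100_TRANSISTORS_BN = 80


def transistor_ratio(new_bn: float, old_bn: float) -> float:
    """Return how many times more transistors the newer chip has."""
    return new_bn / old_bn


ratio = transistor_ratio(B200_TRANSISTORS_BN, H100_TRANSISTORS_BN)
print(f"B200 / H100 transistor ratio: {ratio:.1f}x")  # prints: B200 / H100 transistor ratio: 2.6x
```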

NVIDIA has not disclosed the exact dimensions of the B200, but published photos suggest it uses two full reticle-sized dies, each surrounded by four HBM3e stacks. Each stack provides 24GB of memory and 1TB/s of bandwidth over a 1024-bit interface.
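The memory figures above can be multiplied out to get per-GPU totals. This sketch assumes all eight stacks are identical and fully enabled, and uses decimal units (1 TB/s = 8,000 Gb/s). Because the 1TB/s per-stack figure is rounded, the implied per-pin data rate is only approximate.

```python
# Per-stack HBM3e figures as described in the text (assumed identical for every stack).
STACK_CAPACITY_GB = 24
STACK_BANDWIDTH_TBPS = 1.0   # TB/s per stack (rounded figure)
STACK_INTERFACE_BITS = 1024  # data pins per stack
DIES = 2
STACKS_PER_DIE = 4

total_stacks = DIES * STACKS_PER_DIE
total_capacity_gb = total_stacks * STACK_CAPACITY_GB        # 8 stacks * 24 GB
total_bandwidth_tbps = total_stacks * STACK_BANDWIDTH_TBPS  # 8 stacks * 1 TB/s

# Implied per-pin data rate: convert TB/s to Gb/s (x 8,000), then spread over 1024 pins.
per_pin_gbps = STACK_BANDWIDTH_TBPS * 8_000 / STACK_INTERFACE_BITS

print(f"Stacks: {total_stacks}, capacity: {total_capacity_gb} GB, "
      f"bandwidth: {total_bandwidth_tbps:.0f} TB/s")
print(f"Implied per-pin rate: ~{per_pin_gbps:.2f} Gb/s")
```

The 8 TB/s total lines up with the per-GPU HBM3e bandwidth discussed at the end of this section.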

By comparison, the H100 uses six HBM3 stacks of 16GB each (the H200 moves to six 24GB stacks), which means a considerable share of the H100 die is devoted to six HBM memory controllers. The B200 instead cuts the number of HBM controller interfaces to four per die and joins two dies together, reducing the die area spent on memory controllers and freeing more transistors for computation.
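To make the controller trade-off concrete, the sketch below tabulates the stack layouts described above. `HbmLayout` is a hypothetical helper written for illustration, and it assumes one memory-controller interface per stack. Capacities are raw stack totals; shipping parts can expose less (the H100 SXM, for example, ships with 80GB).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HbmLayout:
    """Hypothetical description of a GPU's HBM arrangement."""
    name: str
    dies: int
    stacks_per_die: int
    gb_per_stack: int

    @property
    def stacks(self) -> int:
        # Assumes one HBM controller interface per stack on each die.
        return self.dies * self.stacks_per_die

    @property
    def capacity_gb(self) -> int:
        # Raw capacity of all stacks, not the capacity a product exposes.
        return self.stacks * self.gb_per_stack


layouts = [
    HbmLayout("H100", dies=1, stacks_per_die=6, gb_per_stack=16),
    HbmLayout("H200", dies=1, stacks_per_die=6, gb_per_stack=24),
    HbmLayout("B200", dies=2, stacks_per_die=4, gb_per_stack=24),
]

for layout in layouts:
    print(f"{layout.name}: {layout.stacks_per_die} controllers per die, "
          f"{layout.stacks} stacks, {layout.capacity_gb} GB raw")
```

The B200 ends up with more stacks in total (eight) while each die carries fewer controllers (four instead of six).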

NVIDIA says the new GPU will power AI systems from major players such as OpenAI, Microsoft, Google, and other participants in the AI race. The Blackwell B200 is built on TSMC's 4NP process, an enhanced version of the 4N process used for the existing Hopper H100 and Ada Lovelace GPUs.

· Powerful AI Chip GB200

NVIDIA also introduced the GB200 Superchip, which combines two B200 GPUs with a Grace CPU. This gives the GB200 a theoretical FP4 compute capacity of 40 petaflops, with a configurable TDP of up to 2700W for the entire superchip.
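The 40-petaflop figure follows from doubling the B200's 20 petaflops of FP4. Dividing by the 2700W ceiling gives a rough on-paper efficiency number. This is an assumption-laden sketch: the TDP covers the whole superchip (including the Grace CPU), and real workloads rarely sustain peak FP4 throughput.

```python
B200_FP4_PFLOPS = 20    # per B200 GPU, from the text
GPUS_PER_GB200 = 2      # two B200 GPUs per GB200 Superchip
GB200_MAX_TDP_W = 2700  # configurable ceiling for the whole superchip

gb200_fp4_pflops = GPUS_PER_GB200 * B200_FP4_PFLOPS

# On-paper efficiency at maximum TDP, in TFLOPS per watt (1 PFLOPS = 1000 TFLOPS).
tflops_per_watt = gb200_fp4_pflops * 1000 / GB200_MAX_TDP_W

print(f"GB200 peak FP4: {gb200_fp4_pflops} PFLOPS")
print(f"On-paper FP4 efficiency at {GB200_MAX_TDP_W} W: ~{tflops_per_watt:.1f} TFLOPS/W")
```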

According to NVIDIA, the GB200, with its two Blackwell GPUs and Arm-based Grace CPU, delivers up to 30 times the LLM inference performance of the H100 while cutting cost and energy consumption to as little as 1/25 of the H100's.

Beyond the GB200 Superchip, NVIDIA introduced HGX B200, a server platform that pairs eight B200 GPUs with an x86 CPU (possibly two) in a single node. Configured at a TDP of 1000W per GPU, each B200 delivers up to 18 petaflops of FP4 throughput, which on paper is 10% slower than the GPUs in the GB200.
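The "10% slower" figure is simply the relative gap between 18 and 20 petaflops. `percent_slower` is a hypothetical helper used only for this check.

```python
def percent_slower(slower: float, faster: float) -> float:
    """Percentage by which `slower` trails `faster`."""
    return (faster - slower) * 100 / faster


GB200_GPU_FP4_PFLOPS = 20     # each B200 inside a GB200
HGX_B200_GPU_FP4_PFLOPS = 18  # each B200 in an HGX B200 at 1000 W

gap = percent_slower(HGX_B200_GPU_FP4_PFLOPS, GB200_GPU_FP4_PFLOPS)
print(f"HGX B200 GPU vs. GB200 GPU: {gap:.0f}% slower")  # prints: HGX B200 GPU vs. GB200 GPU: 10% slower
```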

There is also HGX B100, which shares the basic architecture of HGX B200 (one x86 CPU and eight GPUs, in this case B100s) but is designed to drop into existing HGX H100 infrastructure so that Blackwell GPUs can be deployed as quickly as possible. As a result, each GPU's TDP is capped at 700W, the same as the H100, and throughput drops to 14 petaflops of FP4 per GPU.
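Putting the two HGX platforms side by side on paper: the sketch multiplies per-GPU FP4 throughput by eight GPUs and divides per-GPU throughput by per-GPU TDP. `HgxConfig` and the helper functions are hypothetical names for illustration. Only GPU power is counted (no CPU, networking, or cooling), so the per-watt values are illustrative rather than system efficiency figures.

```python
from typing import NamedTuple


class HgxConfig(NamedTuple):
    """Hypothetical summary of an HGX node's on-paper GPU figures."""
    name: str
    gpus: int
    fp4_pflops_per_gpu: float
    gpu_tdp_w: int


configs = [
    HgxConfig("HGX B200", gpus=8, fp4_pflops_per_gpu=18, gpu_tdp_w=1000),
    HgxConfig("HGX B100", gpus=8, fp4_pflops_per_gpu=14, gpu_tdp_w=700),
]


def node_fp4_pflops(cfg: HgxConfig) -> float:
    """Aggregate peak FP4 throughput of all GPUs in the node."""
    return cfg.gpus * cfg.fp4_pflops_per_gpu


def tflops_per_gpu_watt(cfg: HgxConfig) -> float:
    """Peak FP4 TFLOPS per watt of GPU TDP (GPU power only)."""
    return cfg.fp4_pflops_per_gpu * 1000 / cfg.gpu_tdp_w


for cfg in configs:
    print(f"{cfg.name}: {node_fp4_pflops(cfg):.0f} PFLOPS per node, "
          f"{tflops_per_gpu_watt(cfg):.0f} TFLOPS/W per GPU")
```

On these numbers, an HGX B200 node offers about 29% more FP4 throughput (144 vs. 112 petaflops), while the B100's lower power cap gives it slightly more FP4 throughput per GPU watt (20 vs. 18 TFLOPS/W).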

Notably, across these three products each GPU appears to have the same 8 TB/s of HBM3e bandwidth, so the differences likely come down to power, GPU core clocks, and possibly core counts. NVIDIA has not disclosed the number of CUDA cores or streaming multiprocessors in Blackwell GPUs.